In a complex software project, decoupling modules is necessary to avoid a spaghetti-like mess of inter-dependencies. We've all seen projects that attempt to break up functionality into modules, but where every module ends up importing almost all of the others.

Good ol' spaghetti code

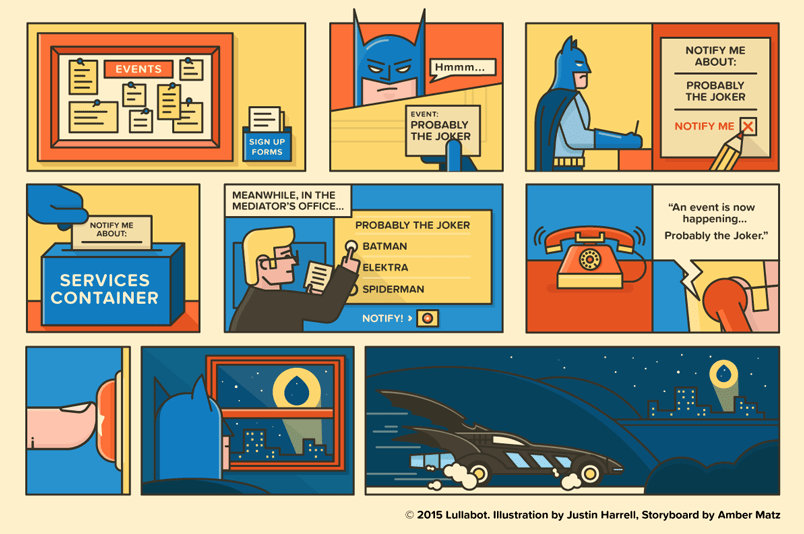

In this effort, event libraries are popular tools. By having certain actions trigger events and letting other modules listen for those events, you avoid calling external module functions directly from the triggering code. Events also help with horizontal scaling, since the separation lets several identical consumers share the load of a high-volume event queue. Event-driven architecture is well established and forms the basis of many large applications today.
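To make the mechanism concrete, here is a minimal sketch of an in-process event bus. `EventBus`, `on`, `emit`, `placeOrder` and the `order:placed` event are hypothetical names chosen for illustration, not the API of any particular library; real event libraries add things like asynchronous delivery and error isolation. The point is that the triggering code only emits an event and never imports or calls the module that reacts to it.

```typescript
// Hypothetical minimal event bus: synchronous, in-process, typed by event name.
type Handler<T> = (payload: T) => void;

class EventBus<Events extends Record<string, unknown>> {
  // Handlers are stored loosely typed; on() and emit() enforce payload types.
  private handlers = new Map<keyof Events, Array<Handler<any>>>();

  // Subscribe to an event; returns a function that removes the subscription.
  on<K extends keyof Events>(event: K, handler: Handler<Events[K]>): () => void {
    const list = this.handlers.get(event) ?? [];
    list.push(handler);
    this.handlers.set(event, list);
    return () => {
      const index = list.indexOf(handler);
      if (index !== -1) list.splice(index, 1);
    };
  }

  // Call every current handler for the event, in subscription order.
  emit<K extends keyof Events>(event: K, payload: Events[K]): void {
    // Iterate over a copy so a handler that unsubscribes doesn't skip others.
    for (const handler of [...(this.handlers.get(event) ?? [])]) {
      handler(payload);
    }
  }
}

// Hypothetical event map for the example.
type AppEvents = {
  "order:placed": { orderId: string; total: number };
};

const bus = new EventBus<AppEvents>();
const sentEmails: string[] = [];

// "Email module": subscribes without the order module knowing it exists.
bus.on("order:placed", ({ orderId }) => sentEmails.push(`confirmation for ${orderId}`));

// "Order module": only announces what happened.
function placeOrder(orderId: string, total: number): void {
  // ... persisting the order is omitted ...
  bus.emit("order:placed", { orderId, total });
}

placeOrder("A-1", 42);
```

`on` returns an unsubscribe function here; keeping subscriptions removable is one of the details a production library would handle more thoroughly.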

The main problem, however, is that events will not by themselves reduce software complexity. You'll achieve code decoupling, but at an immediate cost: instead of seeing directly what happens when something is triggered, you'll need to search your whole codebase for listeners to that trigger. I've seen many examples where a trigger is consumed in only one place, and in these cases it is little more than a glorified goto statement (albeit with a convenient come-from marker). If you also introduce "listenToOnce" consumers, you're well on your way to muddling the execution flow in ways that would not even be possible without triggers. Poorly implemented, events can make your code really hard to analyze.

Spaghetti code with events

When using events, you need to think in events. You need to apply events as an architectural tool when designing your software's workflows. Good examples of events are:

- Business rules, e.g. a money transaction could trigger a check of the transferred amount against a business rule stating that all large transactions must be flagged for manual review.

- Peripheral input, e.g. listening for keypresses or input from external sensors.

- Process transfer, e.g. completed data collection could trigger an event for a consumer service to process the collected data.

- Pivotal events, i.e. conceptual events that might induce processes elsewhere. For example, an event could be triggered when the software config has been updated, allowing other modules to reload it.
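Here is a sketch of the first and last kinds of events in that list, assuming a tiny hand-rolled `Bus` rather than any specific library. The event names (`transaction:created`, `config:updated`), the `MANUAL_REVIEW_THRESHOLD` value and the `themeModule` cache are hypothetical. In both cases the emitter announces a meaningful fact, and the listener owns the consequence.

```typescript
// Hypothetical minimal event bus, kept untyped for brevity.
type Listener = (payload: any) => void;

class Bus {
  private listeners = new Map<string, Listener[]>();

  on(event: string, listener: Listener): void {
    const list = this.listeners.get(event) ?? [];
    list.push(listener);
    this.listeners.set(event, list);
  }

  emit(event: string, payload?: unknown): void {
    for (const listener of this.listeners.get(event) ?? []) listener(payload);
  }
}

const bus = new Bus();

// Business rule: flag large transactions for manual review.
// The threshold is a made-up value for this example.
type Transaction = { id: string; amount: number };
const MANUAL_REVIEW_THRESHOLD = 10000;
const flaggedForReview: string[] = [];

bus.on("transaction:created", (tx: Transaction) => {
  if (tx.amount >= MANUAL_REVIEW_THRESHOLD) flaggedForReview.push(tx.id);
});

// Pivotal event: a module that caches config reloads it when it changes.
type Config = { theme: string };
const themeModule = { theme: "light" };

bus.on("config:updated", (config: Config) => {
  themeModule.theme = config.theme;
});

// The payment code only announces transactions; it knows nothing about the rule.
bus.emit("transaction:created", { id: "t1", amount: 500 });
bus.emit("transaction:created", { id: "t2", amount: 25000 });
bus.emit("config:updated", { theme: "dark" });
```

Adding another rule, or another module that reacts to config changes, means adding a listener; the emitting code stays untouched.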

A bad example could be: Your data loader module shows a loading spinner. It then starts loading gallery data, and upon completion fires the event "loaded:gallery-data", which is picked up by your gallery module. This, in turn, uses the loaded data to render items, and then fires an event "rendered:gallery", which is picked up by the data loader again to hide the spinner.
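The bad example might look roughly like this, again with a hypothetical minimal bus and a hard-coded list standing in for the real data request. Each module is small, but to understand when the spinner disappears you have to follow `loaded:gallery-data` into the gallery module and `rendered:gallery` back out again.

```typescript
// Hypothetical minimal event bus, untyped for brevity.
type Listener = (payload: any) => void;

class Bus {
  private listeners = new Map<string, Listener[]>();
  on(event: string, listener: Listener): void {
    const list = this.listeners.get(event) ?? [];
    list.push(listener);
    this.listeners.set(event, list);
  }
  emit(event: string, payload?: unknown): void {
    for (const listener of this.listeners.get(event) ?? []) listener(payload);
  }
}

const bus = new Bus();
const log: string[] = [];

// --- Data loader module ---
function loadGalleryData(): void {
  log.push("spinner:on");
  const data = ["a.jpg", "b.jpg"]; // stand-in for a real request
  bus.emit("loaded:gallery-data", data);
}
// The spinner is hidden only when the *other* module reports back.
bus.on("rendered:gallery", () => log.push("spinner:off"));

// --- Gallery module ---
bus.on("loaded:gallery-data", (items: string[]) => {
  for (const item of items) log.push(`render:${item}`);
  bus.emit("rendered:gallery");
});

loadGalleryData();
// log is now ["spinner:on", "render:a.jpg", "render:b.jpg", "spinner:off"]
```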

The problem here is the module separation and the granularity of your events. When every minuscule workflow spans several modules, a different module structure might serve you better. The structure in this example most likely stems from trying to adhere to the Single-Responsibility Principle, with one module in charge of data loading and another in charge of displaying the gallery.

You need to strike a balance between single responsibility and good encapsulation of functionality. A module can have one overarching responsibility, while its subclasses deal with the separate concerns the module needs in order to function.
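One possible restructuring, sketched under the assumption that loading, rendering and the spinner all belong to the same user-facing feature: a single `Gallery` module owns the workflow and delegates each concern to an internal helper class. The class names are hypothetical, and the loader is synchronous only to keep the sketch short; a real one would likely return a `Promise`.

```typescript
// Hypothetical internal helpers: each handles one concern of the Gallery module.
class Spinner {
  visible = false;
  show(): void {
    this.visible = true;
  }
  hide(): void {
    this.visible = false;
  }
}

class GalleryDataLoader {
  // Stand-in for a real (usually asynchronous) request.
  load(): string[] {
    return ["a.jpg", "b.jpg"];
  }
}

class GalleryRenderer {
  rendered: string[] = [];
  render(items: string[]): void {
    this.rendered = items.map((src) => `<img src="${src}">`);
  }
}

// The module with one overarching responsibility: showing the gallery.
class Gallery {
  readonly spinner = new Spinner();
  readonly renderer = new GalleryRenderer();
  private readonly loader = new GalleryDataLoader();

  // The whole workflow reads top to bottom in one place; no events needed.
  show(): void {
    this.spinner.show();
    try {
      this.renderer.render(this.loader.load());
    } finally {
      this.spinner.hide();
    }
  }
}

const gallery = new Gallery();
gallery.show();
```

The `try`/`finally` keeps hiding the spinner right next to the code that shows it, and hides it even if loading or rendering throws.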

In closing, events are an architectural approach, not just a tool to insert whenever you need something to happen in another module. If you notice yourself doing this, you started thinking about decoupling too late.